After seeing Doom Neural Upscale 2X by hidfan, I became interested in testing out “super resolution” techniques on the images found in Rainbow Six and Rogue Spear.

Super resolution is the process of taking a small image and generating extra detail to produce a higher-resolution image, rather than simply blurring the small one. There are many techniques for achieving this. In this article I use an open source pre-trained network, ESRGAN (Enhanced SRGAN), and an image upscaling service called LetsEnhance.io.

Goals / Requirements

My goal is to upscale textures from a 20-year-old game, so the process needs to work on extremely small textures and produce visually appealing results. There are a lot of textures to process, so I’d like to avoid any hand tweaking. My goals boil down to this list:

- High resolution textures from existing data

- Low noise, low artifact results

- A fully automated process with no per-image human intervention.

- Pre-processing and post-processing of images is ok, as long as the process can be automated and applied to all images.

- Services with no possibility of API access are a no-go.

Initial testing

I came across ESRGAN after reading a DSOGaming article on the Morrowind Enhanced Textures mod. The results looked promising, and because it is open source I could run it locally without worrying about API quotas or high costs. It was a natural first choice.

First I ran all the textures from the first mission of Rainbow Six through ESRGAN using the model RRDB_ESRGAN_x4. The results were mixed. The textures were undoubtedly higher resolution and sharper, but overall too much harsh detail was added, which gave them a strongly over-sharpened appearance.

I ran the same textures through again using the model RRDB_PSNR_x4. These textures appeared over-smoothed and lacked the detail the previous model had added. There was some improvement in texture detail, but many artifacts were still introduced. At best, these results were not substantially better than real-time bilinear filtering. At worst, they showed significant visual artifacting. The added benefits do not outweigh the artifacting issues.
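For reference, the real-time baseline here is plain bilinear filtering. The sketch below is a minimal pure-Python 4x bilinear upscale of a single-channel image stored as a list of rows. The function name and the pixel-centre sampling convention are my own assumptions. A GPU's texture filtering will differ in details such as wrapping and mipmaps.

```python
def bilinear_upscale(img, scale):
    """Upscale a 2D list of numbers by an integer factor using bilinear interpolation."""
    h, w = len(img), len(img[0])
    out = []
    for oy in range(h * scale):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        sy = min(max((oy + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for ox in range(w * scale):
            sx = min(max((ox + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend horizontally along the two neighbouring rows, then blend vertically.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out
```

Running each color channel through `bilinear_upscale(channel, 4)` gives the same output size as the x4 models, which makes side-by-side comparisons straightforward.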

More details on the differences between the two trained models are available in the paper ESRGAN: Enhanced Super-Resolution Generative Adversarial Networks. The paper also discusses mixing the results of the two models to reduce artifacting, an idea I explore a little later.
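The paper's network interpolation blends the two models’ weights parameter by parameter: θ = (1 − α)·θ_PSNR + α·θ_GAN. Here α ranges from 0 to 1 and trades the PSNR model’s smoothness for the GAN model’s detail. This blends network weights, which is different from averaging output images. The sketch below shows only the arithmetic. It uses plain dicts of flat lists as a stand-in for real model state dicts, and the function name and the `conv.weight` key are hypothetical.

```python
def interpolate_weights(psnr_params, gan_params, alpha):
    """Blend two parameter sets with identical layout: (1 - alpha) * PSNR + alpha * GAN."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    if psnr_params.keys() != gan_params.keys():
        raise ValueError("both models must share the same architecture")
    blended = {}
    for name, psnr_values in psnr_params.items():
        gan_values = gan_params[name]
        if len(psnr_values) != len(gan_values):
            raise ValueError(f"shape mismatch for {name}")
        # Linear interpolation, applied to every individual weight.
        blended[name] = [(1.0 - alpha) * p + alpha * g for p, g in zip(psnr_values, gan_values)]
    return blended
```

For example, `interpolate_weights({"conv.weight": [0.0, 2.0]}, {"conv.weight": [1.0, 4.0]}, 0.5)` gives `{"conv.weight": [0.5, 3.0]}`. The blended parameters would then be loaded into the network and used to upscale as normal.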

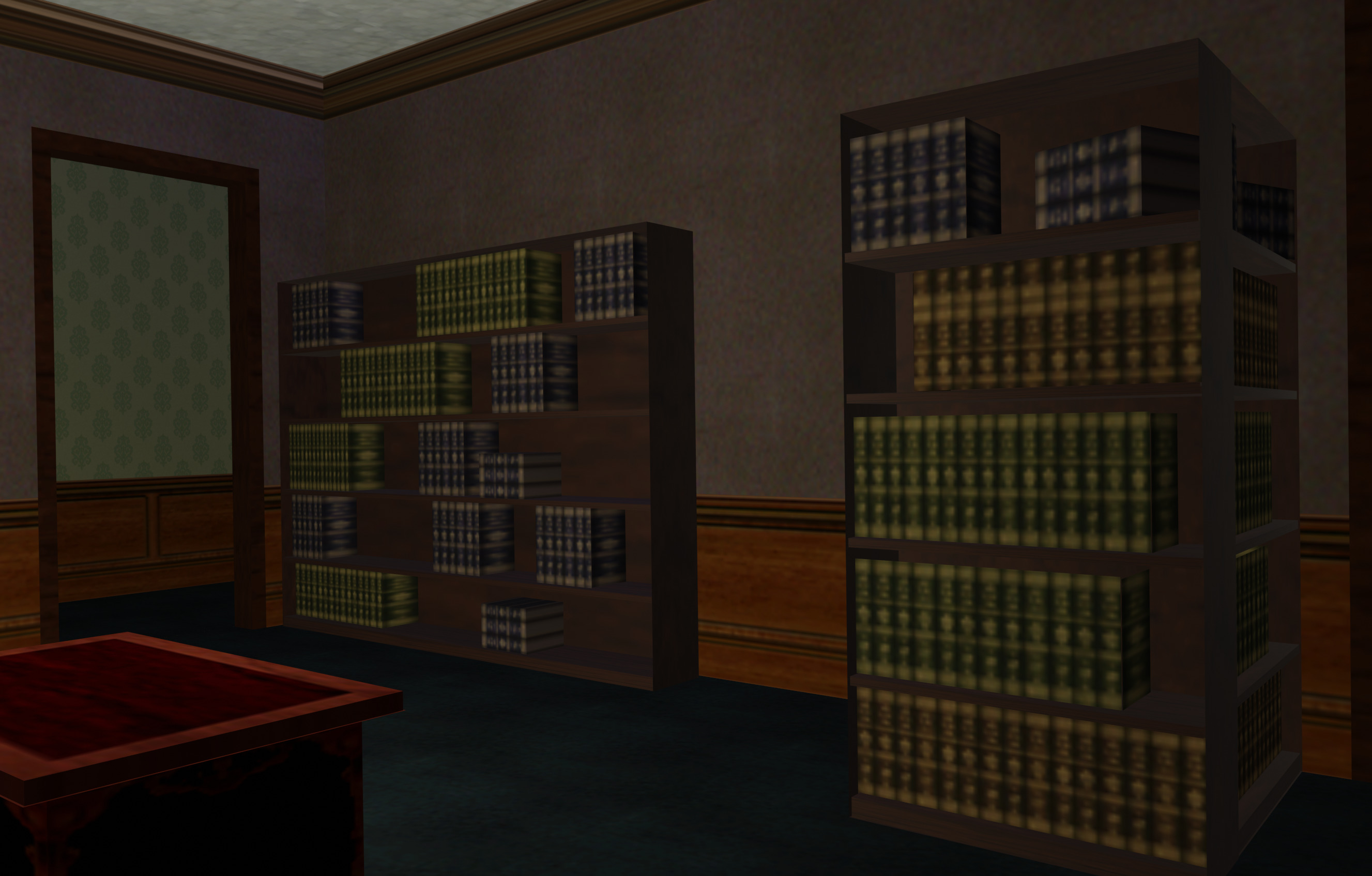

Original Textures

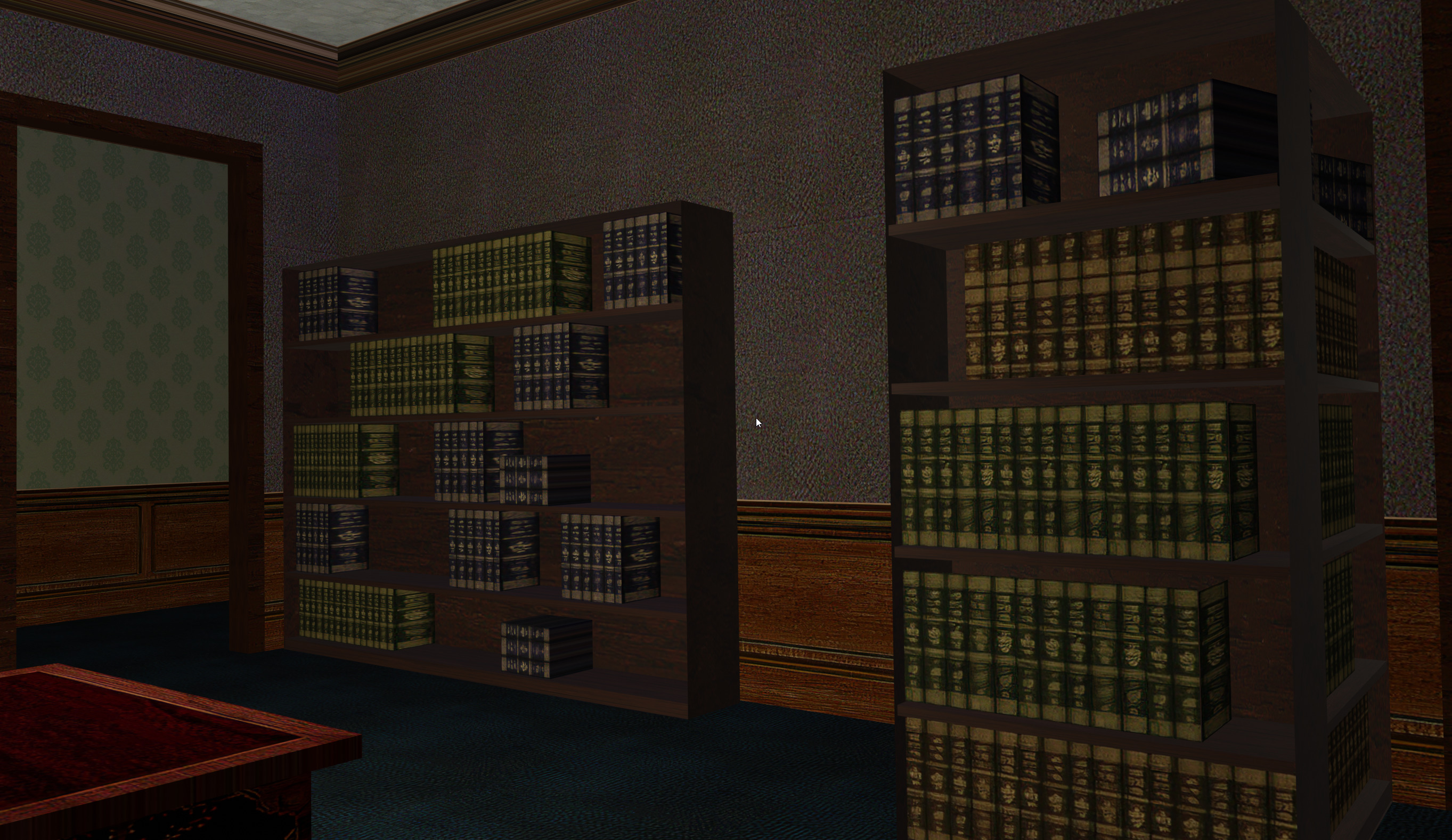

RRDB_ESRGAN_x4 Textures

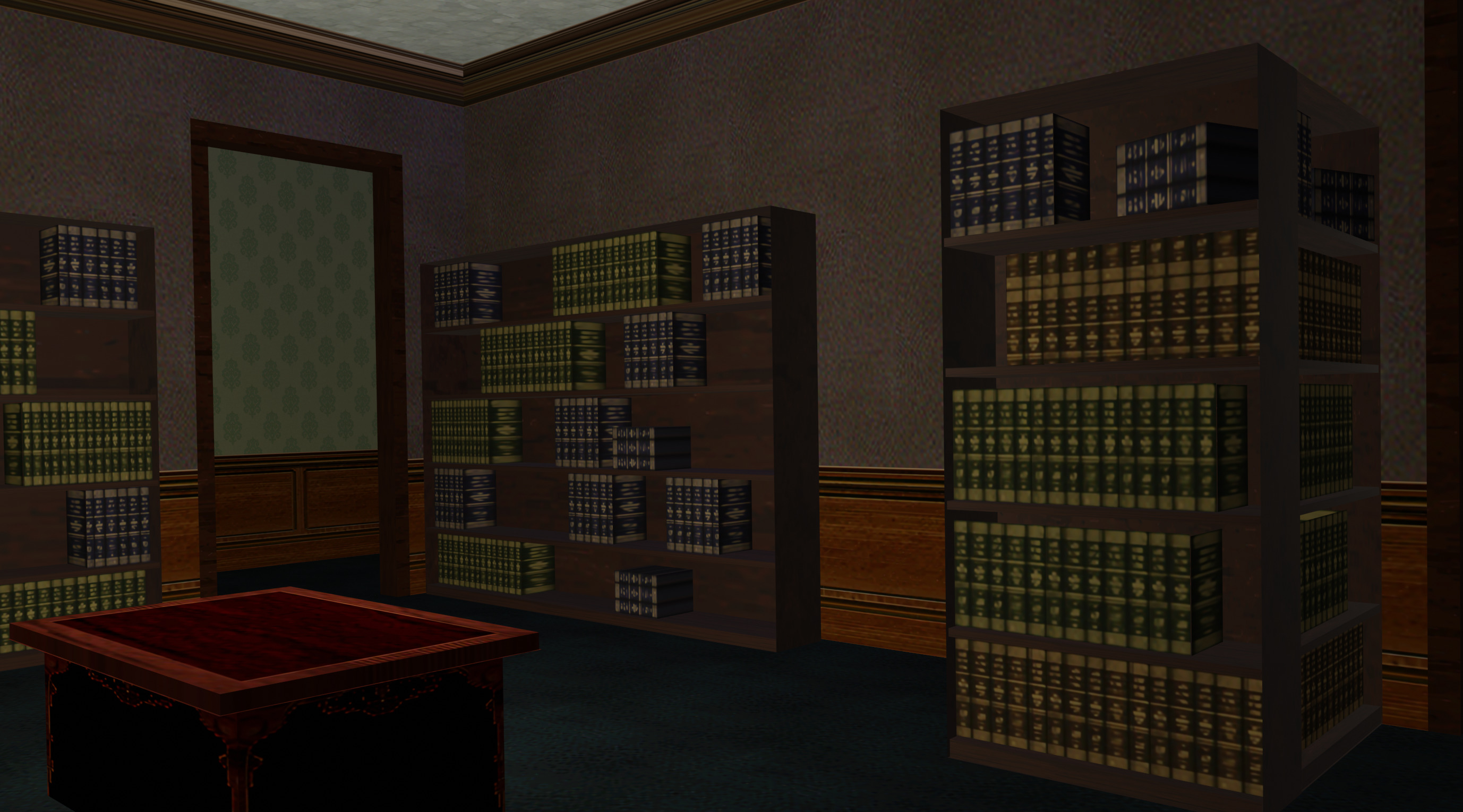

RRDB_PSNR_x4 Textures

As these screenshots show, RRDB_ESRGAN_x4 makes the wall textures look quite harsh, but the books have come up about as well as could be expected. The results with RRDB_PSNR_x4 are better, though not earth-shattering.

Original Textures

RRDB_ESRGAN_x4 Textures

RRDB_PSNR_x4 Textures

In these shots, note the bricks on the fence and on the embassy, and the tiles on the driveway. The bricks have come up extremely well, but the tiles show an exaggerated, streak-like pattern, and the streaking remains with the PSNR model. The textures gain a little sharpness, but not enough to justify the artifacts. The textures generated by the RRDB_ESRGAN_x4 model also appear noticeably brighter.

Original Textures

RRDB_ESRGAN_x4 Textures

RRDB_PSNR_x4 Textures

In these comparisons, look at the buildings in the background, the hedges, the flowerbed, and the concrete and grass around the pond. The buildings suffer significant artifacting. The concrete and grass around the pond gain noticeable sharpness with both models, and the hedgerow sees another decent boost. The flowerbed once again looks over-sharpened with both models.

Hybrid approach

Taking a page out of hidfan’s book, I tried averaging results from a few sources. Since I don’t have API access to LetsEnhance.io, I picked a single image covering a variety of scenarios to see how the approach performed. I used M13_Loading.png, a 640x480 in-game screenshot displayed while the mission loads (who would have guessed?). I ran this through:

- LetsEnhance.io

- ESRGAN with pre-trained model: RRDB_ESRGAN_x4

- ESRGAN with pre-trained model: RRDB_PSNR_x4

Once I had all three results, I opened them in Photoshop and stacked them to get the mean color. This helped smooth out some of the artifacts, though it was far from perfect. Downsampling the images also helped with noise. Unfortunately, a lot of artifacting remained, including halos, strange lines and striations. In particular, look at the noise introduced in the sky and clouds.
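The stacking and downsampling steps could be automated instead of done by hand in Photoshop. This minimal sketch assumes single-channel images stored as lists of rows, with all upscaler outputs already at the same size. It takes the per-pixel mean (the equivalent of a Mean stack) and then shrinks the result with a simple box filter. The box filter is my stand-in for Photoshop’s resampling, not the same algorithm, and the function names are hypothetical.

```python
def mean_stack(images):
    """Per-pixel mean of several same-sized 2D images, like a 'Mean' stack."""
    if not images:
        raise ValueError("need at least one image")
    h, w = len(images[0]), len(images[0][0])
    if any(len(img) != h or any(len(row) != w for row in img) for img in images):
        raise ValueError("all images must have the same dimensions")
    n = len(images)
    return [[sum(img[y][x] for img in images) / n for x in range(w)] for y in range(h)]


def box_downsample(img, factor):
    """Shrink by an integer factor, averaging each factor x factor block (box filter)."""
    h, w = len(img) // factor, len(img[0]) // factor
    area = factor * factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx] for dy in range(factor) for dx in range(factor)) / area
            for x in range(w)
        ]
        for y in range(h)
    ]
```

Each of the R, G and B channels would be processed separately. For example, `box_downsample(mean_stack([a, b, c]), 2)` turns three 4x results into a single 2x image.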

Original Image

Merged and Downsampled Result

Color dithering issues

At this point I realised that a lot of the noise and strange artifacts were in areas where a dithering pattern was visible. The source textures are extracted from the game, which stores all textures and images as 16-bit images. The red, green and blue channels are therefore stored at 5, 6 and 5 bits of precision respectively. Where an alpha channel is also stored, each of the four channels gets just 4 bits. To stop this loss of precision from causing extreme color banding, the textures were encoded with a dithering pattern during conversion. This hides the banding from the human eye and gives the appearance of higher color precision. Unfortunately, the neural networks read these patterns as detail.
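To make the precision loss concrete, the sketch below unpacks and packs RGB565 pixels. It assumes the common layout with red in the top five bits; I haven’t confirmed the exact layout of the game’s files. Because red keeps only 32 of the original 256 levels, a smooth gradient collapses into visible bands. `dither_to_5bit` then shows one way dithering hides that banding: a 2x2 Bayer ordered dither. The game’s actual dithering method is unknown, so treat this as an illustration only. It turns a flat color between two steps into a checkerboard, exactly the kind of fine pattern a network can mistake for detail.

```python
BAYER_2X2 = [[0, 2], [3, 1]]  # threshold order for a 2x2 ordered-dither tile


def unpack_rgb565(pixel):
    """Expand a 16-bit RGB565 value (red in the top 5 bits) to 8-bit (r, g, b)."""
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F
    # Copy the top bits into the empty low bits so 0 maps to 0 and full scale maps to 255.
    return (r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2)


def pack_rgb565(r, g, b):
    """Quantize 8-bit channels to RGB565 by dropping their low bits."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def dither_to_5bit(value, x, y):
    """Quantize an 8-bit value to 5 bits with a 2x2 Bayer ordered dither at pixel (x, y)."""
    # One 5-bit step covers 8 input levels; the tile spreads four thresholds across that step.
    offset = (BAYER_2X2[y % 2][x % 2] + 0.5) * 8 / 4
    return min(int(value + offset) >> 3, 31)


# A full 0..255 red gradient survives as only 32 distinct levels, hence visible banding.
gradient_levels = {unpack_rgb565(pack_rgb565(v, 0, 0))[0] for v in range(256)}

# A flat value halfway between two 5-bit steps becomes a checkerboard of 0s and 1s.
flat_tile = [[dither_to_5bit(4, x, y) for x in range(2)] for y in range(2)]
```

On average, the checkerboard still reproduces the in-between value, which is why it looks smooth from a distance.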

To alleviate this, I manually applied a denoising filter to the source image in Photoshop. I aimed to remove as much of the dithering as possible while retaining detail, without over-sharpening or over-smoothing.
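A hand-tuned Photoshop filter conflicts with the no-per-image-tweaking goal, so the denoise step would eventually need to be scripted. As a hypothetical stand-in (not Photoshop’s Reduce Noise), the sketch below applies a 3x3 binomial blur: [1, 2, 1] / 4 horizontally, then vertically. It then blends the blurred result with the original by a `strength` factor. This kernel has zero response at the highest frequency an image can hold. It therefore flattens a 2x2 checkerboard dither completely in the interior of an image, at the cost of also softening genuine detail.

```python
def binomial_blur(img):
    """3x3 binomial blur ([1, 2, 1] / 4 horizontally, then vertically) with clamped edges."""
    h, w = len(img), len(img[0])
    kernel = (0.25, 0.5, 0.25)

    def at(rows, y, x):
        # Clamp coordinates so border pixels reuse their nearest in-bounds neighbour.
        return rows[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    horiz = [[sum(k * at(img, y, x + d) for k, d in zip(kernel, (-1, 0, 1))) for x in range(w)] for y in range(h)]
    return [[sum(k * at(horiz, y + d, x) for k, d in zip(kernel, (-1, 0, 1))) for x in range(w)] for y in range(h)]


def reduce_dither(img, strength=1.0):
    """Blend the original with its blurred copy: strength 0 leaves it untouched, 1 is fully blurred."""
    blurred = binomial_blur(img)
    return [[(1 - strength) * o + strength * b for o, b in zip(orow, brow)] for orow, brow in zip(img, blurred)]
```

A `strength` below 1.0 keeps more of the original detail. Raising it is the scripted equivalent of using a stronger filter.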

I then followed the same process:

- Run through all upscalers/models

- Average in Photoshop

- Downsample using “Bicubic (smooth gradients)”

The results improved, especially in areas with a lot of dithering, but a large number of artifacts remained. A slightly stronger denoise filter would smooth out the remaining dithering issues. Other artifacts will probably also be lessened with more pre-processing.

Denoised Image

Merged and Downsampled Result

Conclusion

The results achieved by hidfan on Doom Neural Upscale 2X are amazing, and I’d really like to achieve a similar level of success upscaling textures for Rainbow Six and Rogue Spear. The approaches I’ve tried so far are extremely promising, but they aren’t quite meeting my needs yet. Compared to the Morrowind Enhanced Textures mod, the source textures in Rainbow Six are extremely low resolution and cover large portions of the screen, so small imperfections are significantly more noticeable. I suspect this is why my results are less acceptable.

Being limited to a 4x upscale didn’t leave much wiggle room to hide artifacts with downsampling and averaging. An approach that allows an 8x upscale would help a lot. However, the larger the upscale, the more data has to be inferred, which increases the chance of artifacts. I’m confident that other techniques, perhaps trained on larger data sets, will yield better results.
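A rough way to quantify why larger factors are riskier: upscaling by a factor s turns each source pixel into s² output pixels, so only about one value in s² is backed by the original data. The numbers below assume the 640x480 M13_Loading.png. The last line assumes that artifacts in neighbouring pixels are uncorrelated, which is optimistic.

$$
N_{\text{out}} = s^2 N_{\text{in}}, \qquad \text{inferred fraction} \approx 1 - \frac{1}{s^2}
$$

$$
s = 4:\quad 640 \times 480 = 307{,}200 \;\to\; 2560 \times 1920 = 4{,}915{,}200 \text{ pixels}, \qquad 1 - \tfrac{1}{16} = 93.75\%
$$

$$
s = 8:\quad 1 - \tfrac{1}{64} \approx 98.4\%
$$

$$
\text{8x, then downsample by 2: each output pixel averages } 2 \times 2 = 4 \text{ samples}, \qquad \sigma_{\text{out}} = \frac{\sigma}{\sqrt{4}} = \frac{\sigma}{2}
$$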

Dithering-induced noise caused a significant number of artifacts, which would require a lot of hand tweaking to fix. A quick test with denoising gave very promising results. A more robust pre-processing stage should alleviate this problem, and possibly others. It will need fine tuning to preserve as much detail as possible.

The upscaling processes themselves introduced significant artifacts, so a post-processing stage will definitely be needed. Averaging the output of multiple services or networks definitely improved the results. I suspect that averaging too many outputs will produce a blurry image, and that the best results will come from selecting the best two or three. Halos and bright spots were common artifacts. I’d like to explore using the source image in a post-processing stage to remove these hot pixels.
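One way to use the source image against hot pixels is neighbourhood clamping, sketched here as a hypothetical approach I haven’t tried yet. Each upscaled pixel is limited to the range of values found in the 3x3 block around its corresponding source pixel, widened by an optional `tolerance`. A halo or bright spot brighter than anything nearby in the original gets pulled back. Legitimate detail inside the original range is untouched. The function name and parameters are my own, and the sketch assumes single-channel images stored as lists of rows.

```python
def clamp_to_source(upscaled, source, scale, tolerance=0.0):
    """Clamp each upscaled pixel into the value range of its 3x3 source neighbourhood."""
    sh, sw = len(source), len(source[0])
    result = []
    for oy, row in enumerate(upscaled):
        sy = min(oy // scale, sh - 1)  # source row this output pixel came from
        out_row = []
        for ox, value in enumerate(row):
            sx = min(ox // scale, sw - 1)
            neighbourhood = [
                source[ny][nx]
                for ny in range(max(sy - 1, 0), min(sy + 2, sh))
                for nx in range(max(sx - 1, 0), min(sx + 2, sw))
            ]
            lo = min(neighbourhood) - tolerance
            hi = max(neighbourhood) + tolerance
            # Overshoots (halos, hot pixels) are pulled back into the local source range.
            out_row.append(min(max(value, lo), hi))
        result.append(out_row)
    return result
```

A `tolerance` of zero is strict and may flatten highlights that the network legitimately sharpened. A small positive value is probably a better starting point.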

In the future I’d like to evaluate more software, other neural networks, and other techniques. Of most interest are services and software that can upscale by more than 4x, which would leave more wiggle room to downsample and hide artifacts. I’ve applied for access to NVIDIA GameWorks: Materials & Textures, and when I get a chance I’ll try out Topaz AI Gigapixel. Numerous research papers have also shared their trained models and code on GitHub, and I will keep an eye out for promising ones.

From reading through the early thread on Doom Neural Upscale, it appears that translucency was a problem for these techniques. I’ll need to pay special attention to translucent textures. Alternatively, I could copy the translucency and masking values from the source files and upscale the alpha channel or color masks the old-fashioned way.
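Handling the alpha channel the old-fashioned way could look like the sketch below. Only the RGB data goes through the network, while the source alpha is upscaled with nearest-neighbour scaling and reattached. Nearest neighbour keeps hard cut-out edges crisp, whereas a soft mask would suit bilinear scaling better. The function names are hypothetical, and the sketch assumes the RGB result is exactly `scale` times the source size.

```python
def nearest_upscale(channel, scale):
    """Classic nearest-neighbour upscale: each source value becomes a scale x scale block."""
    return [[value for value in row for _ in range(scale)] for row in channel for _ in range(scale)]


def merge_alpha(rgb_upscaled, source_alpha, scale):
    """Attach an old-fashioned alpha upscale to network-upscaled (r, g, b) pixels."""
    alpha = nearest_upscale(source_alpha, scale)
    if len(alpha) != len(rgb_upscaled) or len(alpha[0]) != len(rgb_upscaled[0]):
        raise ValueError("RGB and alpha sizes do not match")
    return [
        [(r, g, b, a) for (r, g, b), a in zip(rgb_row, alpha_row)]
        for rgb_row, alpha_row in zip(rgb_upscaled, alpha)
    ]
```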

It’s still early days in my project so I have plenty of time to let these things mature, and more importantly, experiment more!
